The coexistence of visible (VS) and infrared (IR) cameras has opened new perspectives for the development of multimodal systems. In general, visible and infrared cameras are used as complementary sensors in applications such as video surveillance and driver assistance systems. Visible cameras provide information in daytime scenarios, while infrared cameras are used as night vision sensors.

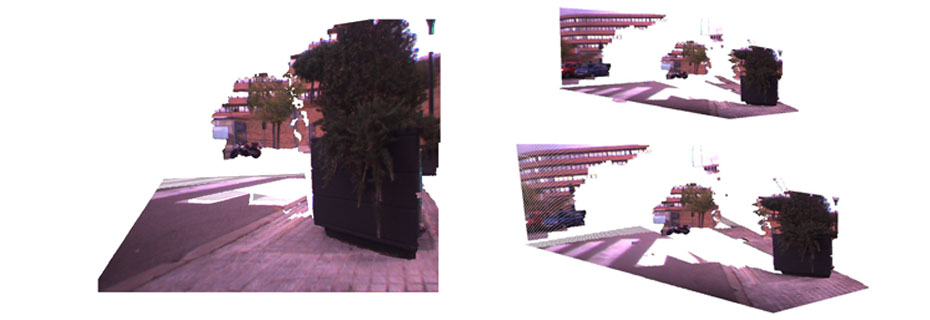

The approaches mentioned above involve registration and fusion stages. The result is an image that lies in 2D space, even though it contains several channels of information. The current work goes beyond classical registration and fusion schemes by asking the following question: “is it possible to obtain 3D information from a multimodal stereo rig?”. Clearly, if the objective is to obtain depth maps close to the state of the art, classical binocular stereo systems (VS/VS) are more appropriate. The motivation of the current work is therefore to show that 3D information can be generated from images belonging to different spectral bands.

In the proposed multimodal stereo system, the cameras are not restricted to working in a complementary way, as is traditionally the case. They also work cooperatively to extract 3D information. This challenge represents a step forward in the state of the art of the 3D multispectral community. The results obtained from this research will benefit applications in different domains (e.g., applications where the detection of an object of interest can be enriched with an estimate of its aspect or its distance from the cameras).